At Threadfin, we have the very best technical experts on our team—many of whom are also good technical writers with a passion for sharing their knowledge. Our technical blog focuses on tips, tricks and potential gotchas from the best IT engineers in the industry.

This article dives deep into Azure Load Balancer: how to configure it, and a potential gotcha it presents when configured improperly.

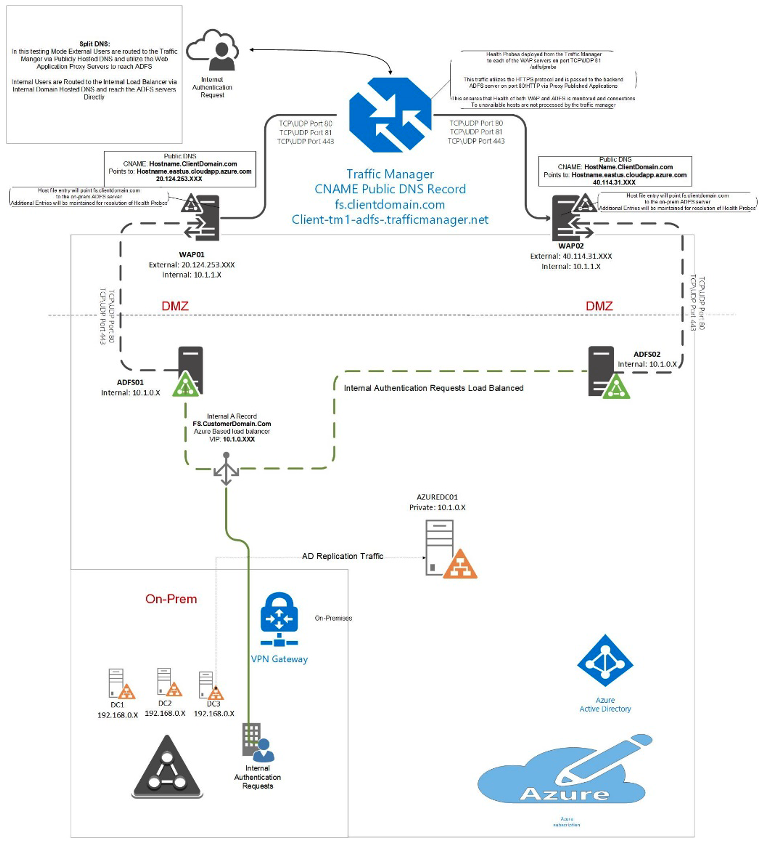

This situation arose while deploying a rather complex ADFS infrastructure involving a two-arm architecture with Azure Traffic Manager and an internal load balancer. With the entire infrastructure hosted in Azure, deployment should be a breeze, right? Not really…

By design, authentication requests to ADFS follow one of two paths, “Internal” or “External,” with redundant servers on both the frontend and the backend. As with most ADFS deployments, DNS determines which path a client takes.

Internal users rely on internal DNS servers, which point FS.clientdomain.com to the internal load balancer in front of the ADFS servers. This load balancer passes traffic on ports 80 and 443: port 80 carries the health probe and port 443 carries authentication traffic.
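A minimal Python sketch of what that port 80 health probe checks. It assumes the probe targets the standard AD FS `/adfs/probe` endpoint (the path is not stated above) and that, as with Azure Load Balancer HTTP probes, only an HTTP 200 response counts as healthy. The function names are illustrative.

```python
import urllib.error
import urllib.request

# Assumption: the port 80 health probe requests the standard AD FS probe path.
PROBE_PATH = "/adfs/probe"


def probe_url(host: str, port: int = 80, path: str = PROBE_PATH) -> str:
    """Build the plain-HTTP URL that the health probe requests."""
    return f"http://{host}:{port}{path}"


def is_healthy(status: int) -> bool:
    """Only HTTP 200 counts as healthy, mirroring an Azure LB HTTP probe."""
    return status == 200


def check(host: str, port: int = 80, timeout: float = 5.0) -> bool:
    """Return True if the node answers the probe with HTTP 200."""
    try:
        with urllib.request.urlopen(probe_url(host, port), timeout=timeout) as resp:
            return is_healthy(resp.status)
    except OSError:
        # URLError and HTTPError (4xx/5xx responses) both derive from OSError,
        # as do timeouts and refused connections: all count as unhealthy.
        return False
```

For example, `check("adfs01.clientdomain.com")` (a hypothetical node name) returns `True` only when that server answers the probe, which is the same condition the load balancer uses to keep it in rotation.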

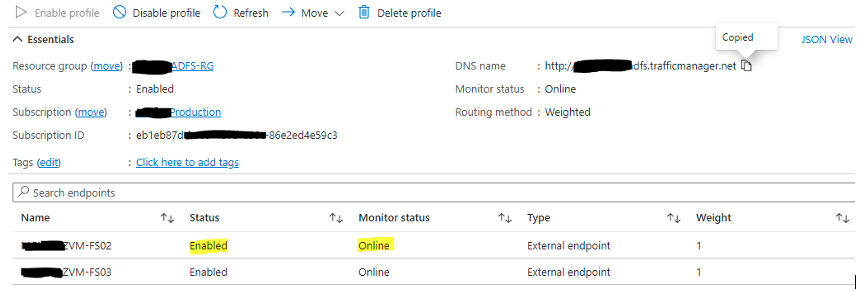

External users resolve DNS through public-facing DNS servers, which point the FS name for the client domain to Azure Traffic Manager. Traffic Manager is essentially a highly available DNS routing mechanism that performs health checks on the backend services. It load balances traffic according to profiles set within the Azure environment and ensures that requests are sent only to endpoints that report as online. For the health probes to cover both the frontend service (Web Application Proxy, or WAP) and the backend service (ADFS), you must enable ports 81, 80 and 443. Port 81 probes the WAP with HTTPS traffic, which the WAP passes to the backend ADFS server on port 443. This lets Traffic Manager respond appropriately if any portion of the ADFS leg goes down: if the WAP is not accessible, the probe fails because the traffic is not relayed; if the ADFS server goes down, the probe fails because the expected response is never generated.
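The probe chaining can be modeled in a few lines of Python. This sketch assumes each WAP can relay to any healthy ADFS server (for example, through the internal load balancer); that is an assumption, not something stated above. The endpoint names are hypothetical, and this models the decision only, not Traffic Manager's actual implementation.

```python
def wap_probe_ok(wap_up: bool, adfs_up: list[bool]) -> bool:
    """Port 81 probe: the WAP must relay it AND some ADFS node must answer on 443."""
    return wap_up and any(adfs_up)


def healthy_endpoints(waps: dict[str, bool], adfs_up: list[bool]) -> list[str]:
    """WAP endpoints that Traffic Manager would keep returning in DNS answers."""
    return [name for name, up in waps.items() if wap_probe_ok(up, adfs_up)]


# Hypothetical layout: wap2 is down and the first ADFS node is down.
print(healthy_endpoints({"wap1": True, "wap2": False}, [False, True]))  # ['wap1']
```

Because the probe only succeeds end to end, an endpoint whose WAP is healthy but which cannot reach any ADFS server drops out of DNS answers just as if the WAP itself had failed.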

Once deployed, the design appeared to work beautifully. I was able to authenticate to any Azure AD-integrated application without issue, and I could take down any leg, or multiple nodes, of the configuration without fault. As long as at least one WAP and one ADFS server were available, authentication still succeeded. I was delighted to share my results with the client and move this design into production.

Once the new fault-tolerant design moved into production via DNS updates, users were immediately greeted with authentication failures on mission-critical applications that were not Azure AD-integrated and instead relied solely on the ADFS infrastructure for authentication. One clue in tracking down the issue was that these authentication requests succeeded as long as users were not required to use MFA. Another clue was that authentication to Azure AD-integrated applications always succeeded, regardless of MFA requirements.

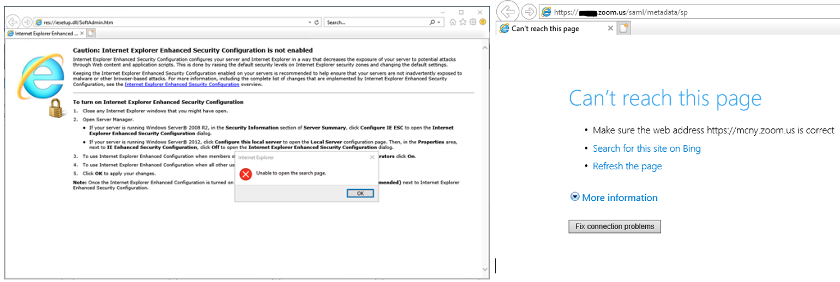

Investigation revealed that the newly deployed ADFS servers in Azure had no outbound internet connectivity, while the Web Application Proxies did. Noting the differences between the servers, I assumed the cause was Group Policy or other security measures applied to the domain-joined servers; the Web Application Proxies are not domain-joined, so they would not receive any of these policies.
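One quick way to confirm this kind of symptom is a raw TCP connection test run on each server. This Python sketch only checks whether a TCP handshake completes within a timeout; it does not test proxies, TLS or HTTP, and the function name is illustrative.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to host:port completes within timeout."""
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable, or DNS failure (socket.gaierror).
        return False
```

Running `can_connect("login.microsoftonline.com")` (just an example internet target) on a WAP and then on an ADFS server would show the asymmetry described above: `True` on the WAP, `False` on the ADFS servers.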

I dug into GPOs, the security settings of various hardening applications, firewalls and Azure Network Security Groups. Nothing I found would block outbound internet requests, yet the symptom was unmistakable.

After much research (and some head scratching), I came across an article describing a similar issue with servers placed behind an Azure internal load balancer. I had ruled out the load balancer early on because MFA worked for applications that used Azure AD. Besides, a load balancer only handles incoming traffic destined for the frontend VIP configured on it, right? Wrong! Here’s what I found:

- Azure AD-integrated apps continued to work because the MFA prompt was handled by Azure AD itself rather than by ADFS, so the ADFS servers never needed an outbound internet connection for those sign-ins.

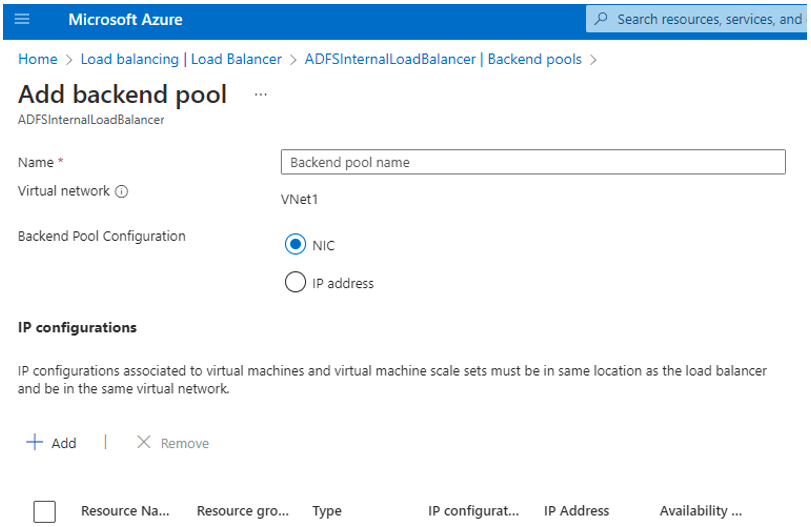

- Outbound internet traffic from the ADFS servers behind the internal load balancer was blocked by default because the load balancer’s backend pool had been configured by NIC (Azure resource selection) rather than manually by IP. Heading 3 in this Microsoft article shows a solution to this problem. Adding a public IP to the backend servers also restores internet connectivity, but it is less appealing than simply changing how the load balancer’s backend pool is configured. Once you know this is the issue, the topic becomes much easier to research: a simple Google search for “Azure VMs can’t access internet behind load balancer” reveals countless articles detailing the problem.
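The two clues and the findings above can be summarized as a tiny Python truth table. This models what this particular deployment exhibited, using hypothetical parameter names; it is not a general rule about ADFS.

```python
def auth_succeeds(azure_ad_app: bool, mfa_required: bool, adfs_outbound: bool) -> bool:
    """Observed outcome in this deployment (a model, not a general ADFS rule)."""
    if not mfa_required:
        return True  # sign-ins without MFA never needed the internet
    if azure_ad_app:
        return True  # Azure AD raised the MFA prompt itself
    # MFA relayed through ADFS required the ADFS servers to reach the internet.
    return adfs_outbound


# Before the fix, the ADFS servers had no outbound access:
# only the ADFS-only app with MFA (the last case) fails.
for app, mfa in [(True, True), (False, False), (False, True)]:
    print(app, mfa, auth_succeeds(app, mfa, adfs_outbound=False))
```

Framed this way, the only failing combination points directly at the one dependency unique to it: outbound internet access from the ADFS servers.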

It boils down to this: in Azure you can configure the backend pool of an internal load balancer in two ways, by NIC (Azure resource selection) or by IP address. Configured by IP, the load balancer operates in the traditional manner and only affects traffic destined for the frontend VIP. However, if you use the very intuitive, easy interface to select NICs from a list, you are also granting the load balancer additional access rights to those resources. Once granted, the load balancer uses those rights to apply additional security to the resource: namely, it shuts down all outbound traffic unless it is explicitly allowed via outbound rules. The unfortunate reality is that outbound rules cannot be configured on an internal load balancer at all; they are a Standard SKU feature, but they require a public frontend IP. Azure presents no notification that adding a VM this way could cut off its internet access, and no indication that outbound connectivity must then be provided some other way.
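The configuration behavior described here reduces to a small decision function. This is a hedged Python model of the findings in this deployment, with hypothetical names; it is not Azure’s documented logic, and it ignores other ways of providing outbound connectivity.

```python
from enum import Enum


class PoolMode(Enum):
    NIC = "nic"  # VM NICs selected in the portal (Azure resource selection)
    IP = "ip"    # backend members entered by IP address


def has_outbound_internet(mode: PoolMode, vm_public_ip: bool) -> bool:
    """Outbound access for a VM behind the internal LB, as observed here."""
    if vm_public_ip:
        return True  # an instance-level public IP restored connectivity
    # NIC-based membership cut off outbound traffic; IP-based did not.
    return mode is PoolMode.IP


for mode in PoolMode:
    print(mode.name, has_outbound_internet(mode, vm_public_ip=False))
```

The model makes the trade-off explicit: both fixes restore access, but switching the pool to IP-based membership avoids exposing the ADFS servers with public IPs.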

The solution to this (very frustrating) problem was to delete and recreate the backend pool as IP-based. Immediately after this change, the two ADFS servers could once again reach the internet, and MFA prompts began working for ADFS Relying Party Trusts as expected.

Delivery excellence is at our heart. With a national footprint and global delivery, Threadfin has provided delivery excellence for 20 years. Threadfin is a Microsoft Gold and AWS Advanced Consulting Partner, and we leverage those partnerships to achieve business outcomes. Through each technology shift, we maintain our position as trusted digital transformation experts and advisors.

Contact us today.